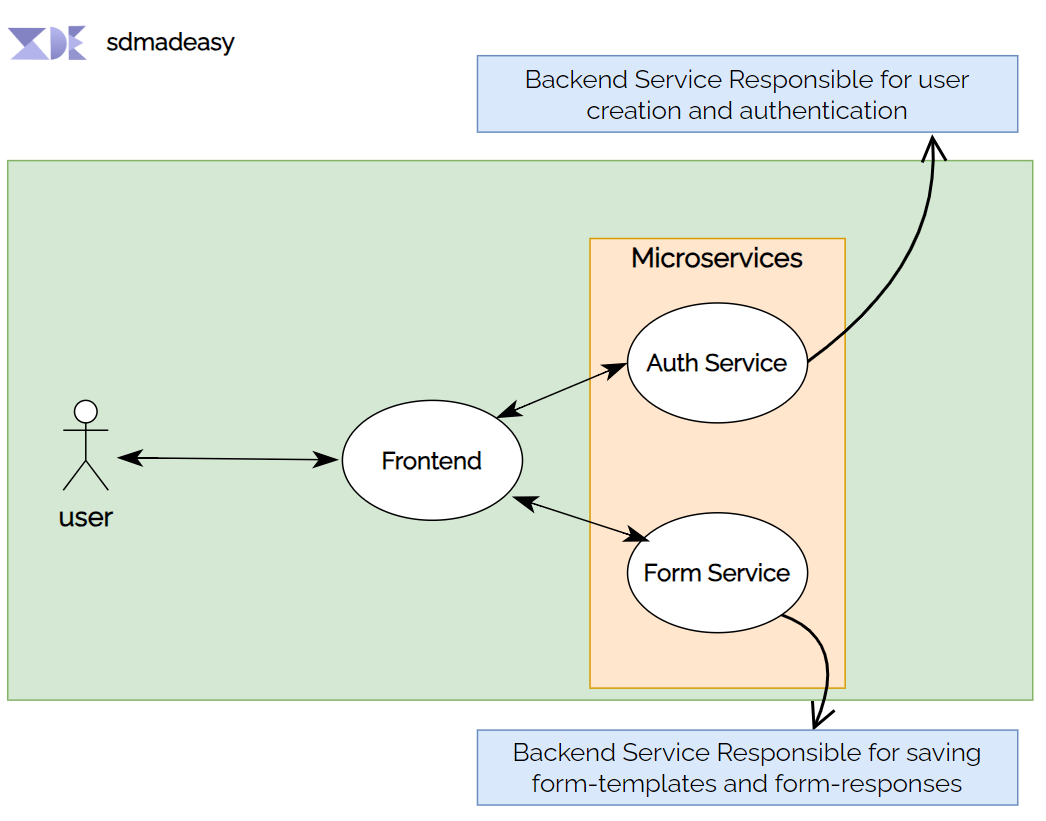

Last year, I developed a SaaS (Software as a Service) application for an internal team. It lets managers and team leaders create forms (for collecting feedback, lead generation, etc.) through a straightforward user interface and distribute them across the team for a variety of uses. The architecture of our form builder service is simple, but we identified inefficiencies in cost, security, performance, and web service management. Let's look at exactly what we did to address them.

Problem

The current architecture of our form builder service lacks efficiency in terms of cost, security, performance, and web service management.

High-Level Overview of the Form Builder Service

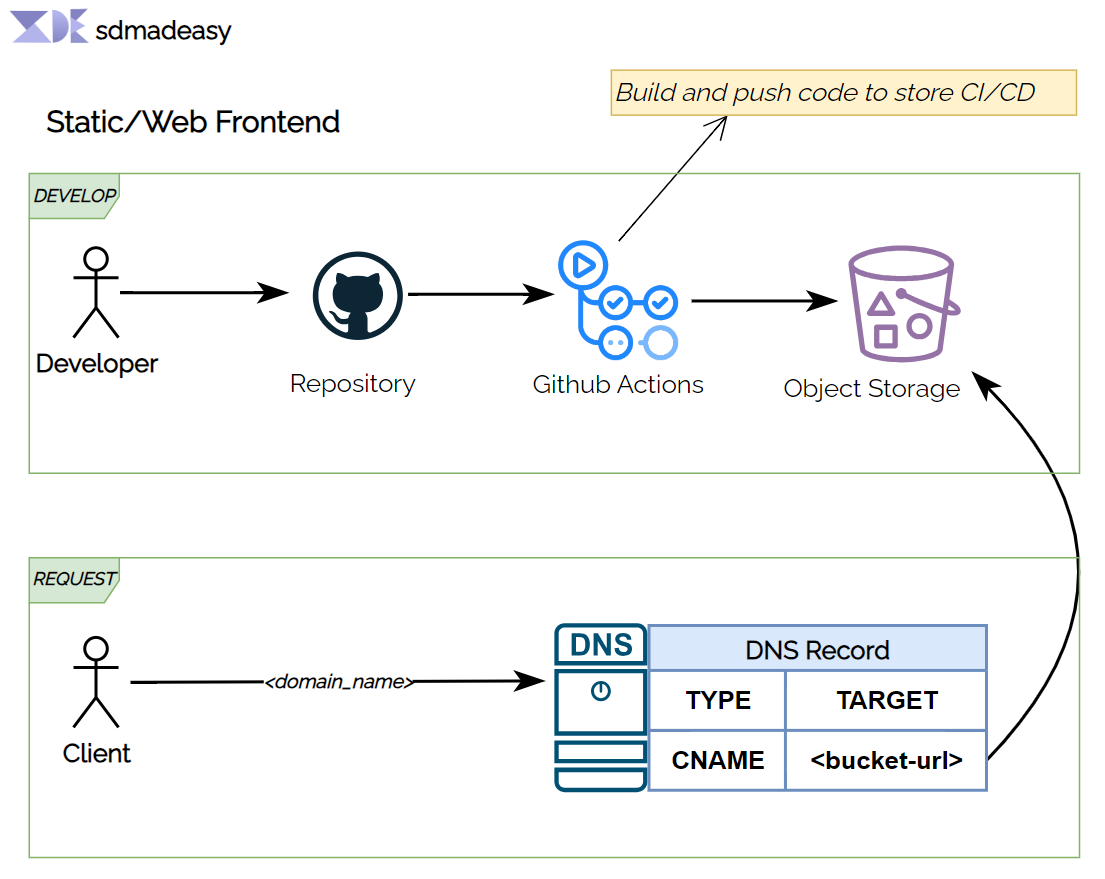

Frontend view of the application

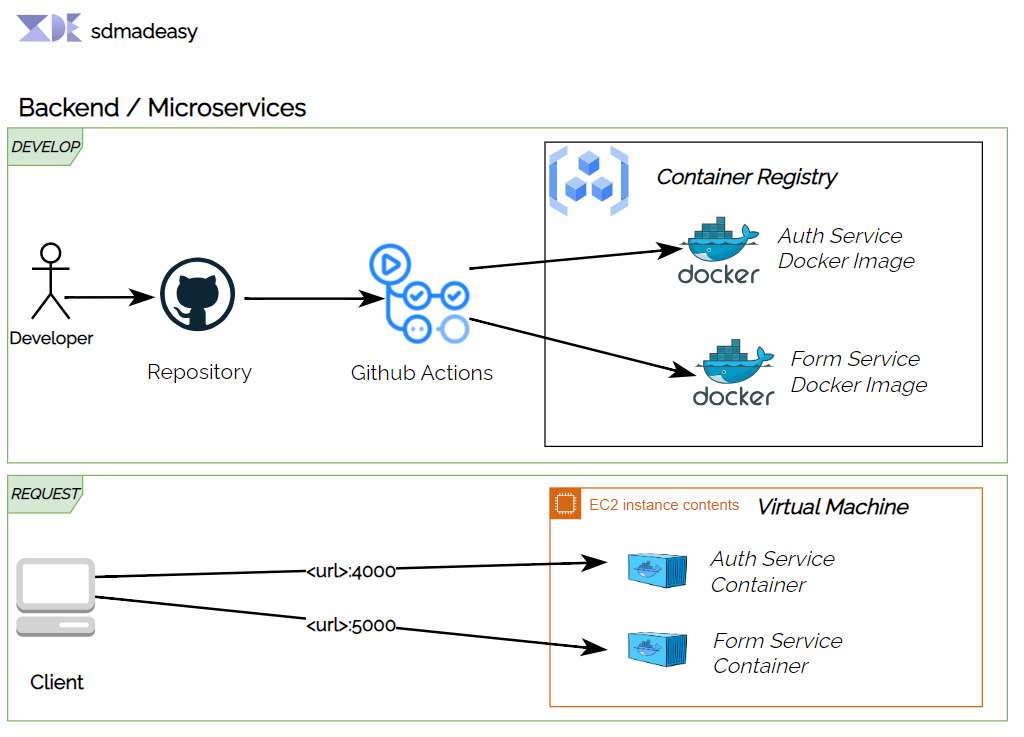

Backend view of the application

Let's look at the flaws in this design:

- Limited Caching and Content Delivery: Every request to a service, such as the S3 bucket or a microservice, is processed in full and its data is retrieved from the source. This increases latency and server load, especially for frequently accessed or static content.

- Rate Limiting: We have no way to control the number of incoming requests, which leads to unstable resource consumption, scalability challenges, increased security risks, and performance degradation.

- Performance Impact of SSL Termination: SSL/TLS encryption and decryption are computationally expensive, particularly under high traffic volumes. When each microservice is responsible for its own SSL termination, those services can suffer significant performance degradation.

- Routing and URL Management: Poor URL management makes it hard to tell which service a URL belongs to. For instance, if our frontend developer needs to call the authentication service, they have to use `http://<host>:4000`; to interact with the form service, they have to use `http://<host>:5000`. This is not intuitive, because nothing in the URL indicates which service it corresponds to.

Solution

Let's see what we need to do to solve these issues.

In short, a reverse proxy can address all of the problems above. Before going further, let's look at what a reverse proxy is.

What Is a Reverse Proxy?

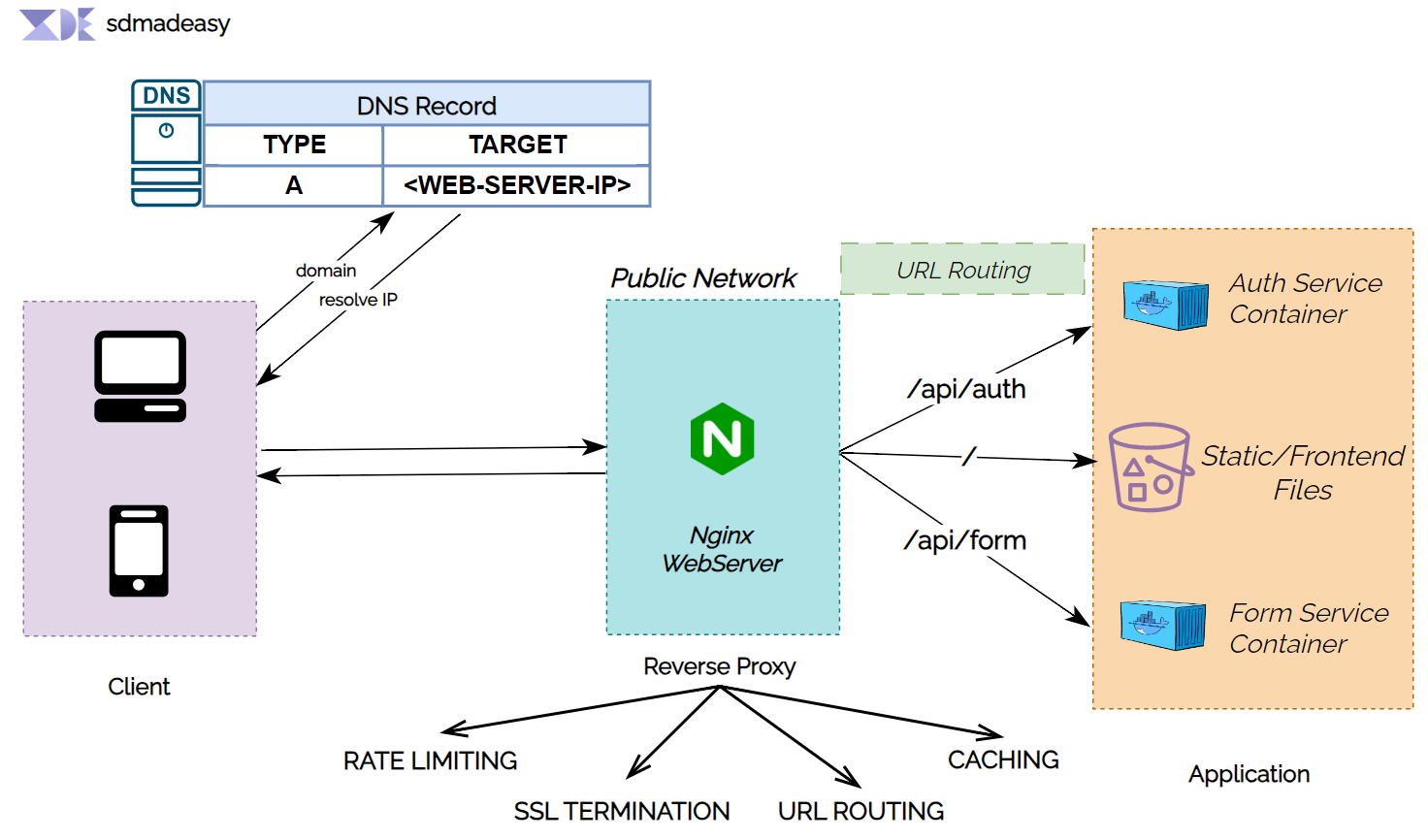

A reverse proxy is an intermediary server or piece of software that sits between client devices, such as web browsers, and backend servers, such as application servers or microservices. It receives client requests and forwards each one to the appropriate backend server. When the backend server responds, the reverse proxy sends the response back to the client.
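To make the definition concrete, here is a minimal sketch of a reverse proxy in NGINX. The hostname `example.com` and the backend address `127.0.0.1:8080` are placeholders, not part of our setup, and the server block is assumed to sit inside the `http` context.

```nginx
# Minimal reverse proxy sketch; example.com and 127.0.0.1:8080 are placeholders.
server {
    listen 80;
    server_name example.com;

    location / {
        # Forward every request to a single backend server.
        proxy_pass http://127.0.0.1:8080;

        # Pass the original host and client address on to the backend.
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    }
}
```

The client only ever talks to NGINX on port 80; the backend's address stays internal.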

Let's see how a reverse proxy addresses each issue:

- Limited Caching and Content Delivery: A reverse proxy can cache responses from the services, so frequently accessed or static content is served from the cache instead of being fetched from the source every time. This reduces both latency and server load.

- Rate Limiting: A reverse proxy can enforce rate-limiting policies that control the number of incoming requests to the services, whether by request rate, concurrency, or specific API endpoint. Applying these rules at the proxy layer improves resource management, scalability, and security, and prevents performance degradation caused by excessive requests.

- Performance Impact of SSL Termination: A reverse proxy can handle SSL/TLS termination, taking the encryption and decryption work off the microservices so they can focus on application logic. This improves their response times and scalability.

- Routing and URL Management: A reverse proxy can act as a centralized routing layer where you configure URL mappings for each service. Meaningful paths, such as `/api/auth` for the authentication service and `/api/form` for the form service, make it clear which service each URL corresponds to, improving the developer experience and the application's maintainability.
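Terminating TLS at the proxy also gives us a single place to make handshakes cheaper. As an illustrative sketch (these directives are not in our configuration, and the sizes are example values), session resumption lets returning clients reuse earlier session parameters instead of performing a full handshake:

```nginx
# Illustrative TLS session resumption settings for an HTTPS server block.
ssl_session_cache shared:SSL:10m;   # cache shared by all worker processes; 1 MB holds about 4000 sessions
ssl_session_timeout 10m;            # how long a cached session may be reused
```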

In our case, we used NGINX as the reverse proxy.

Reverse Proxy Configuration

nginx.conf

```nginx
# proxy_cache_path and limit_req_zone are only valid in the http { } context,
# so this file must be included from inside http { } (for example via conf.d/).
# ${SERVER_NAME} and ${BUCKET_NAME} are not expanded by NGINX itself; they are
# template placeholders substituted (for example with envsubst) before NGINX starts.

# Set up caching for S3 bucket
proxy_cache_path /tmp/ levels=1:2 keys_zone=s3_cache:10m max_size=500m inactive=60m use_temp_path=off;

# Define the rate limit zone (10 requests per second per client IP)
limit_req_zone $binary_remote_addr zone=api_rate_limit:10m rate=10r/s;

server {
    listen 443 ssl default_server;
    listen [::]:443 ssl default_server;

    server_name ${SERVER_NAME};

    # A literal path (not the $server_name variable) lets NGINX load the
    # certificate once at startup instead of on every TLS handshake.
    ssl_certificate     /etc/letsencrypt/live/${SERVER_NAME}/fullchain.pem;
    ssl_certificate_key /etc/letsencrypt/live/${SERVER_NAME}/privkey.pem;

    set $bucket ${BUCKET_NAME};

    sendfile on;

    # Everything else is served from the S3 bucket, with caching
    location / {
        rewrite /cached(.*) $1 break;

        resolver 8.8.8.8;

        proxy_cache s3_cache;

        proxy_http_version 1.1;
        proxy_redirect off;

        proxy_set_header Connection "";
        proxy_set_header Authorization '';
        proxy_set_header Host $bucket;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

        proxy_hide_header x-amz-id-2;
        proxy_hide_header x-amz-request-id;
        proxy_hide_header x-amz-meta-server-side-encryption;
        proxy_hide_header x-amz-server-side-encryption;
        proxy_hide_header Set-Cookie;
        proxy_ignore_headers Set-Cookie;

        proxy_cache_revalidate on;
        proxy_intercept_errors on;
        proxy_cache_use_stale error timeout updating http_500 http_502 http_503 http_504;
        proxy_cache_lock on;
        proxy_cache_valid 200 304 60m;

        add_header Cache-Control max-age=31536000;
        add_header X-Cache-Status $upstream_cache_status;

        proxy_pass https://$bucket; # without trailing slash
    }

    # Authentication service: /api/auth/login is forwarded as /login
    location /api/auth/ {
        limit_req zone=api_rate_limit burst=20 nodelay;
        # No variables in proxy_pass, so the URI "/" replaces the matched prefix.
        proxy_pass http://${SERVER_NAME}:4000/;
    }

    # Form service: the /api/form/ prefix is replaced by / in the same way
    location /api/form/ {
        limit_req zone=api_rate_limit burst=20 nodelay;
        proxy_pass http://${SERVER_NAME}:5000/;
    }
}
```
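The server block above only listens on port 443, so clients that still connect over plain HTTP are not served by it. A companion block like the following (an assumption, not part of our original file; it reuses the same `${SERVER_NAME}` placeholder) would redirect them to HTTPS:

```nginx
# Hypothetical companion block: redirect all plain-HTTP traffic to HTTPS.
server {
    listen 80 default_server;
    listen [::]:80 default_server;
    server_name ${SERVER_NAME};

    # 301 = permanent redirect to the same host and path over HTTPS.
    return 301 https://$host$request_uri;
}
```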

Explanation of the NGINX Configuration File

The configuration defines a single server block that listens on port 443 (over both IPv4 and IPv6) and terminates TLS with the Let's Encrypt certificate for the server name. Let's break down its location blocks:

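/ Location Block:

```nginx
location / {
    rewrite /cached(.*) $1 break;

    resolver 8.8.8.8;

    proxy_cache s3_cache;

    proxy_http_version 1.1;
    proxy_redirect off;

    proxy_set_header Connection "";
    proxy_set_header Authorization '';
    proxy_set_header Host $bucket;
    proxy_set_header X-Real-IP $remote_addr;
    proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

    proxy_hide_header x-amz-id-2;
    proxy_hide_header x-amz-request-id;
    proxy_hide_header x-amz-meta-server-side-encryption;
    proxy_hide_header x-amz-server-side-encryption;
    proxy_hide_header Set-Cookie;
    proxy_ignore_headers Set-Cookie;

    proxy_cache_revalidate on;
    proxy_intercept_errors on;
    proxy_cache_use_stale error timeout updating http_500 http_502 http_503 http_504;
    proxy_cache_lock on;
    proxy_cache_valid 200 304 60m;

    add_header Cache-Control max-age=31536000;
    add_header X-Cache-Status $upstream_cache_status;

    proxy_pass https://$bucket; # without trailing slash
}
```

This location block handles every request that is not handled by the `/api/auth` and `/api/form` locations and reverse proxies it to the S3 bucket. The `rewrite` strips a `/cached` prefix, so a request for `/cached/logo.png` is fetched as `/logo.png`. Because `proxy_pass` contains a variable (`$bucket`), NGINX resolves the bucket's hostname at request time, which is why the block needs a `resolver` (8.8.8.8, Google Public DNS).

Responses are cached in the `s3_cache` zone. The zone itself, along with its on-disk path (`/tmp/`), maximum size (500 MB), and inactivity timeout (60 minutes), is defined by the `proxy_cache_path` directive at the top of the file; `proxy_cache s3_cache` simply enables that zone for this location. `proxy_cache_valid` keeps 200 and 304 responses for 60 minutes, `proxy_cache_revalidate` refreshes expired entries with conditional requests, `proxy_cache_lock` lets only one request at a time populate a given cache entry, and `proxy_cache_use_stale` serves a stale copy if S3 returns an error or times out, or while an entry is being updated.

The `proxy_set_header` directives shape the request sent to S3: the `Host` header is set to the bucket hostname (S3 uses it to identify the bucket), any client `Authorization` header is dropped, and the client's address is passed on in `X-Real-IP` and `X-Forwarded-For`. On the way back, the `proxy_hide_header` directives strip the S3-specific `x-amz-*` headers and `Set-Cookie` from the response, and `proxy_ignore_headers Set-Cookie` stops a `Set-Cookie` header from preventing caching. The `add_header` directives add a long-lived `Cache-Control` header for browsers and an `X-Cache-Status` header that reports the cache result, such as `HIT`, `MISS`, or `STALE`.

The trailing slash on `proxy_pass` is intentionally omitted. Without a URI part, NGINX forwards the (possibly rewritten) request URI unchanged, so `/index.html` is fetched from `https://$bucket/index.html`.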

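/api/auth Location Block:

```nginx
location /api/auth/ {
    limit_req zone=api_rate_limit burst=20 nodelay;
    proxy_pass http://${SERVER_NAME}:4000/;
}
```

This location block handles requests under `/api/auth/`. The `limit_req` directive applies the `api_rate_limit` zone: each client IP address may average 10 requests per second, up to 20 requests above that rate are accepted as a burst and, because of `nodelay`, forwarded immediately instead of being spaced out, and anything beyond the burst is rejected (with a 503 status by default). Accepted requests are proxied over plain HTTP to the authentication service on port 4000. Because both the location and the `proxy_pass` URL end with a slash, NGINX replaces the `/api/auth/` prefix with `/`, so `/api/auth/login` reaches the service as `/login`.

Note that `proxy_pass` uses the `${SERVER_NAME}` template placeholder rather than NGINX's `$server_name` variable. When `proxy_pass` contains a variable, NGINX sends the URI written in the directive (here `/`) to the backend as-is, so every request would reach the service as `/` whatever path the client asked for.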

/api/form Location Block:

```nginx
location /api/form/ {
    limit_req zone=api_rate_limit burst=20 nodelay;
    proxy_pass http://${SERVER_NAME}:5000/;
}
```

This location block handles requests under `/api/form/`. It applies the same `api_rate_limit` zone, so a client's requests to the authentication and form endpoints count against one shared budget of 10 requests per second, and it proxies requests over plain HTTP to the form service on port 5000, again replacing the `/api/form/` prefix with `/`.
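Two refinements to the API locations may be worth considering; neither is in our original file, and the upstream name `auth_service` is hypothetical. First, `limit_req` rejects excess requests with 503 by default, which clients can mistake for an outage, whereas 429 Too Many Requests states the actual cause. Second, because TLS now ends at NGINX, the services only see plain HTTP, so forwarding the original scheme and client address lets them build correct URLs and log real client IPs. A sketch for the authentication service:

```nginx
# Hypothetical named upstream for the authentication service (http context).
upstream auth_service {
    server ${SERVER_NAME}:4000;    # template placeholder, as in the main config
}

# Inside the HTTPS server block: a variant of the /api/auth/ location.
location /api/auth/ {
    limit_req zone=api_rate_limit burst=20 nodelay;
    limit_req_status 429;          # reply "429 Too Many Requests" instead of 503

    # Tell the backend about the original client and protocol.
    proxy_set_header Host $host;
    proxy_set_header X-Real-IP $remote_addr;
    proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    proxy_set_header X-Forwarded-Proto $scheme;

    # No variables here, so the /api/auth/ prefix is replaced by /.
    proxy_pass http://auth_service/;
}
```

The same pattern would apply to the form service on port 5000. A named upstream also makes it easy to add more servers later, since NGINX load-balances across every server listed in the block.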

These location blocks define the different URL paths and their corresponding backend servers or S3 bucket for reverse proxying and handling incoming